In the last two decades, cloud computing has revolutionized the way businesses manage data, services, and applications. Alongside it, local storage solutions have provided organizations with a controlled environment to store their data physically. However, as the digital landscape continues to evolve, the limitations of centralized systems are becoming more apparent. Issues such as security risks, data ownership concerns, and a reliance on centralized entities are prompting a shift toward more decentralized solutions.

This article explores the emerging paradigm of decentralized software, a movement that goes beyond traditional cloud and local storage models. By leveraging decentralized networks and distributed technologies like blockchain, edge computing, and peer-to-peer (P2P) systems, decentralized software provides more robust, secure, and scalable alternatives to legacy infrastructures. We will examine how decentralized systems operate, their advantages over traditional methods, their potential applications, and the challenges they face.

What is Decentralized Software?

Decentralized software refers to applications and systems that distribute data processing and storage across multiple nodes rather than relying on a single centralized server or data center. This distribution minimizes single points of failure, enhances security, and provides greater control to end users. Decentralized software often relies on peer-to-peer (P2P) networks, blockchain technology, and edge computing to operate efficiently.

At its core, decentralization means that no single entity or organization controls the entire system. Instead, power is distributed across participants, often incentivized by the system itself. This is in stark contrast to cloud solutions, where the service provider owns and controls the infrastructure, and local storage solutions, where the infrastructure is physically controlled by the organization.

Chapter 1: The Evolution of Data Storage and Management

The Traditional Approach: Centralized Systems

In the past, businesses and individuals relied heavily on centralized data storage solutions. This often meant hosting applications and data on internal servers or using cloud services provided by companies like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud. The model is straightforward: users interact with servers that are managed by a third party or internally, which hold the data and perform necessary operations.

While centralized systems have enabled businesses to scale quickly, they come with distinct drawbacks:

- Security vulnerabilities: Data stored on centralized servers is a prime target for cyberattacks. A single breach can compromise vast amounts of sensitive information.

- Data ownership: Users must trust service providers with their data, often lacking visibility into how it’s stored, accessed, or processed.

- Single points of failure: If a data center or server fails, the entire service can go down, causing significant disruptions.

The Rise of Decentralization

In response to the limitations of centralized systems, the world began exploring decentralized alternatives. The rise of technologies like blockchain and peer-to-peer networking allowed for the creation of systems where data was distributed, often cryptographically protected, and more resilient to attacks or failures.

Early decentralized systems, such as BitTorrent and cryptocurrency networks like Bitcoin, demonstrated that decentralized software can function effectively at scale. These early systems showed that decentralized models could provide trust and security without relying on central authorities.

As the demand for privacy, transparency, and security increased, decentralized software began gaining traction in various industries, including finance (through decentralized finance or DeFi), data storage, and content distribution.

Chapter 2: Key Components of Decentralized Software

Blockchain Technology

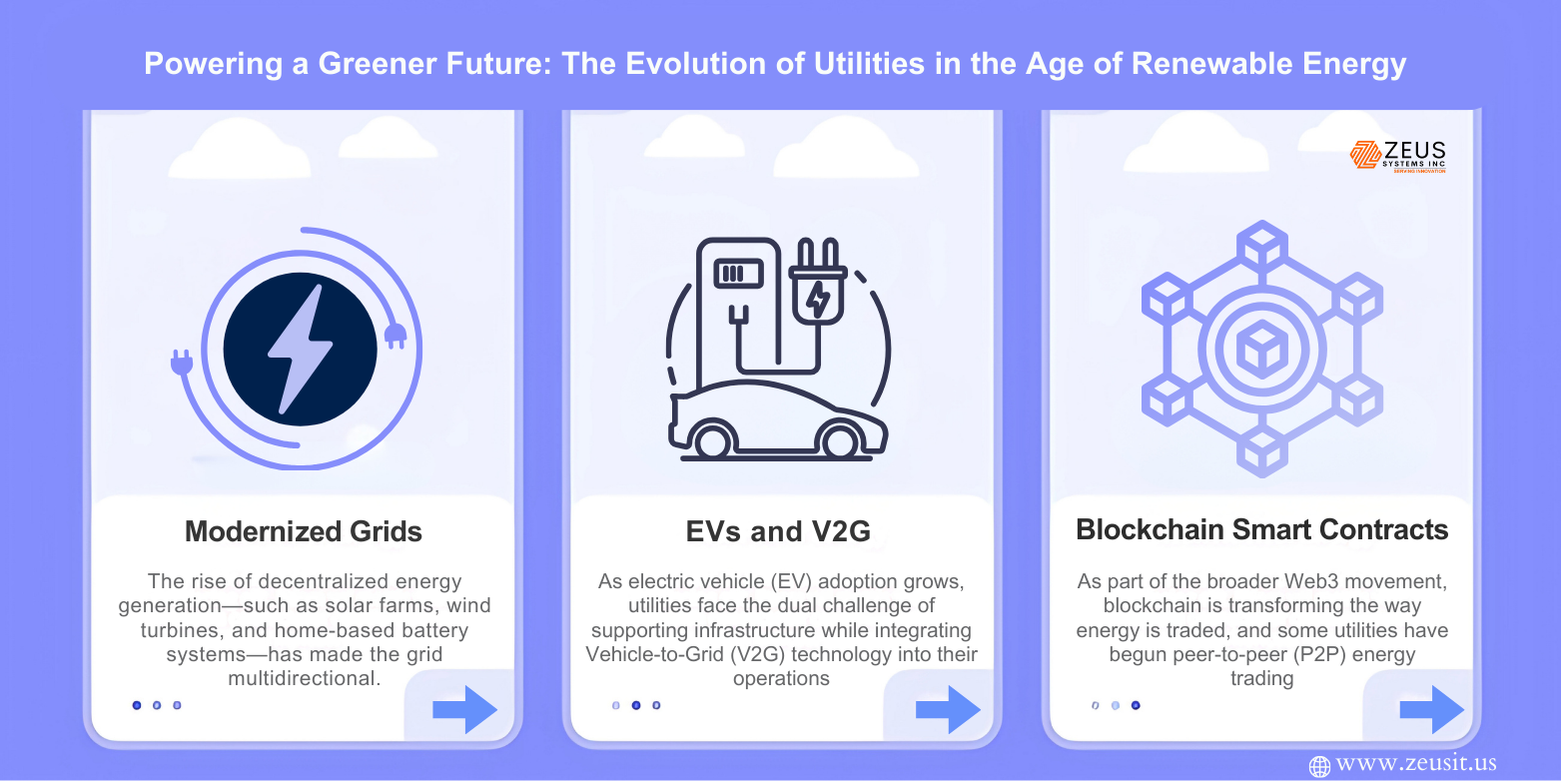

Blockchain is perhaps the most well-known technology associated with decentralization. It is a distributed ledger that records transactions across multiple computers in a way that ensures data integrity, security, and transparency. Each “block” contains a list of transactions, and these blocks are linked together to form a chain.

In the context of decentralized software, blockchain provides several critical features:

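- Immutability: Data written to a blockchain is extremely hard to alter. Each block commits to the hash of the block before it, so changing an old record would invalidate every later block and be rejected by the rest of the network. The result is a permanent and auditable record of transactions.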

- Decentralized trust: Blockchain does not require a central authority to validate transactions, as the network of participants verifies and reaches consensus on the legitimacy of transactions.

- Smart contracts: Decentralized applications (DApps) built on blockchain platforms like Ethereum rely on smart contracts, which are programs stored on the chain that automatically enforce the terms of an agreement when their conditions are met.
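
The way blocks link together, and why that makes tampering detectable, can be sketched in a few lines of Python. This is a minimal illustration only: the block layout and the helper names `block_hash`, `append_block`, and `is_valid` are assumptions made for this example, and it leaves out consensus, signatures, and networking entirely.

```python
import hashlib
import json


def block_hash(index, transactions, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transactions):
    """Append a block that commits to the hash of the current chain tip."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    block = {
        "index": index,
        "transactions": transactions,
        "prev_hash": prev_hash,
        "hash": block_hash(index, transactions, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    expected_prev = "0" * 64
    for block in chain:
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(
            block["index"], block["transactions"], block["prev_hash"]
        )
        if block["hash"] != recomputed:
            return False
        expected_prev = block["hash"]
    return True


chain = []
append_block(chain, ["alice pays bob 5"])
append_block(chain, ["bob pays carol 2"])
valid_before = is_valid(chain)

# Tamper with an earlier block: its stored hash no longer matches its contents.
chain[0]["transactions"] = ["alice pays bob 500"]
valid_after = is_valid(chain)
```

In this sketch `valid_before` is `True` and `valid_after` is `False`. An attacker who also recomputed the hash of the altered block would then break the link stored in the next block, which is why rewriting history means rewriting every later block as well.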

Blockchain has many use cases, including decentralized finance (DeFi), supply chain tracking, and even identity management. These applications demonstrate how blockchain technology provides a secure and transparent method of handling sensitive data.

Peer-to-Peer (P2P) Networks

Another foundational technology behind decentralized software is peer-to-peer (P2P) networking. In a P2P network, each participant (or node) acts as both a client and a server, sharing resources like data, processing power, or storage with other participants. This contrasts with the client-server model, where a central server handles all data and requests from clients.

P2P networks enable:

- Data sharing: Instead of relying on a central server, P2P networks allow users to share files and resources directly with one another, reducing dependency on central infrastructure.

- Resilience: Because there is no central point of failure, P2P networks are highly resistant to outages and attacks.

- Decentralized applications: Many decentralized apps (DApps) are built on P2P networks, where users interact directly with one another, removing intermediaries.

Technologies such as IPFS (InterPlanetary File System) and BitTorrent are well-known examples of P2P systems, with use cases in decentralized storage and content distribution.
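
A core idea behind systems like IPFS is content addressing: data is requested by a hash of its contents rather than by the location of a server. The sketch below illustrates that idea under simplifying assumptions. The `ContentStore` class and `fetch_from_peers` function are hypothetical names for this example, and a plain SHA-256 hex digest stands in for the richer content identifiers that IPFS actually uses.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is the SHA-256 digest of the value."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]  # raises KeyError if this peer lacks it
        # The receiver can verify the content without trusting the sender.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


def fetch_from_peers(peers, address):
    """Return the content from the first peer that holds a valid copy."""
    for peer in peers:
        try:
            return peer.get(address)
        except (KeyError, ValueError):
            continue
    raise LookupError("no peer holds " + address)


peer_a = ContentStore()
peer_b = ContentStore()
address = peer_b.put(b"hello, swarm")

# The requester does not need to know which peer has the data.
fetched = fetch_from_peers([peer_a, peer_b], address)
```

Because the address is derived from the content, identical data always maps to the same address, and any peer can serve it.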

Edge Computing

Edge computing refers to processing data closer to the source of generation, rather than relying on centralized cloud servers. This distributed model allows for faster data processing, reduces latency, and lowers the risk of data breaches by keeping sensitive information closer to its origin.

In a decentralized context, edge computing complements other distributed technologies by enabling local devices to process and store data. This is particularly useful in environments where real-time processing and low-latency responses are critical, such as in autonomous vehicles, IoT devices, or industrial automation systems.
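
As a rough illustration of processing data near its source, the sketch below has an edge device reduce a batch of raw sensor readings to a small summary and forward only that summary plus any out-of-range values. The function name `summarize_at_edge`, the threshold, and the readings are all made up for this example.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce raw readings to a compact summary plus any out-of-range values."""
    anomalies = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "anomalies": anomalies,
    }


# Hypothetical temperature readings collected on the device.
readings = [20.5, 21.0, 20.8, 35.2, 21.1]

# Only this summary would leave the device, not the full stream of raw data.
summary = summarize_at_edge(readings, threshold=30.0)
```

The device can react to the anomaly immediately, and the upstream service receives a handful of values instead of every reading.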

Chapter 3: Advantages of Decentralized Software

Enhanced Security and Privacy

One of the most compelling reasons to adopt decentralized software is the improvement in security and privacy. In centralized systems, data is stored in a single location, making it an attractive target for cybercriminals. If the centralized server is compromised, all the data could be exposed.

Decentralized systems mitigate these risks by distributing data across multiple locations and encrypting it. With technologies like blockchain, data integrity is preserved through cryptographic techniques, making it incredibly difficult for bad actors to alter or manipulate records. Furthermore, decentralized software typically enables users to retain ownership and control over their data, providing a greater level of privacy compared to centralized services.

Reduced Dependency on Centralized Entities

Centralized systems create dependency on service providers or a central authority. In the case of cloud services, users must trust the cloud provider with their data and services. Moreover, they are often subject to the provider’s policies and uptime guarantees, which can change unpredictably.

Decentralized software removes this dependency. It gives users more control over their infrastructure and data. In some cases, decentralized software can even function autonomously, eliminating the need for intermediaries entirely. For instance, decentralized finance (DeFi) protocols allow users to perform financial transactions without relying on banks or payment processors.

Improved Resilience and Availability

Centralized systems are vulnerable to failures due to technical issues, cyberattacks, or natural disasters. Data centers can go offline, causing significant disruptions. In a decentralized system, the distribution of data and services across multiple nodes makes the system more resilient to such failures. Even if one node or network segment goes down, the rest of the system can continue functioning.

Additionally, because no single node has to be online for the service to work, decentralized software can offer better uptime and availability than a deployment that depends on one server or data center. This is especially important for mission-critical applications, where downtime can result in lost revenue or productivity.
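
The effect of replication on availability can be estimated with a short calculation. The sketch below assumes that nodes fail independently and that each has the same availability, which is a strong simplification because real outages are often correlated. The function name and the numbers are illustrative only.

```python
def availability_with_replicas(node_availability, replicas):
    """Probability that at least one of several independent replicas is up."""
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # All replicas must be down at once for the data to be unreachable.
    return 1.0 - (1.0 - node_availability) ** replicas


single = availability_with_replicas(0.9, 1)
triple = availability_with_replicas(0.9, 3)
```

Under these assumptions, three replicas on nodes that are each up 90% of the time yield roughly 99.9% availability, compared with 90% for a single node.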

Cost Efficiency and Scalability

Decentralized systems can be more cost-effective than traditional models in several ways. For example, decentralized storage networks such as Filecoin, which is designed to work alongside the InterPlanetary File System (IPFS), make use of spare storage capacity on participants’ devices, which reduces the need for expensive centralized storage infrastructure. Additionally, decentralized systems can scale horizontally because they draw on the computing and storage power of distributed nodes, rather than requiring centralized data centers to expand their infrastructure.

Chapter 4: Use Cases and Applications of Decentralized Software

Decentralized Storage

One of the most notable applications of decentralized software is in the realm of storage. Traditional cloud storage providers like AWS, Google Drive, or Dropbox rely on centralized servers to store users’ data. In contrast, decentralized storage platforms like IPFS and Filecoin allow users to store and share files across a distributed network of nodes.

The advantages of decentralized storage include:

- Increased privacy and security: Data can be encrypted on the client before upload and then distributed across multiple nodes, so that no single node holds a complete, readable copy.

- Redundancy and availability: Data is stored in multiple locations, reducing the risk of data loss.

- Lower costs: By utilizing spare storage on other devices, decentralized storage platforms can offer lower fees than traditional providers.
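
The redundancy described above can be sketched as follows: a file is split into chunks, each chunk is placed on more than one node, and the file is rebuilt from whichever nodes remain. This is a toy model, not how IPFS or Filecoin place data. The function names, chunk size, replica count, and node names are assumptions for this example, rendezvous hashing is used here simply as one plausible placement rule, and encryption is omitted.

```python
import hashlib


def split_into_chunks(data: bytes, chunk_size: int):
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def pick_nodes(chunk_id: str, node_names, replicas: int):
    """Rendezvous hashing: rank nodes by hash(node, chunk) and take the top few."""
    ranked = sorted(
        node_names,
        key=lambda name: hashlib.sha256(
            (name + ":" + chunk_id).encode("utf-8")
        ).hexdigest(),
    )
    return ranked[:replicas]


def store(data, nodes, chunk_size=4, replicas=2):
    """Place every chunk on `replicas` nodes; return the ordered chunk ids."""
    manifest = []
    for chunk in split_into_chunks(data, chunk_size):
        chunk_id = hashlib.sha256(chunk).hexdigest()
        for name in pick_nodes(chunk_id, sorted(nodes), replicas):
            nodes[name][chunk_id] = chunk
        manifest.append(chunk_id)
    return manifest


def retrieve(manifest, nodes):
    """Rebuild the file from whichever surviving node holds each chunk."""
    parts = []
    for chunk_id in manifest:
        holder = next((n for n in nodes.values() if chunk_id in n), None)
        if holder is None:
            raise LookupError("chunk lost: " + chunk_id)
        parts.append(holder[chunk_id])
    return b"".join(parts)


# Each node is modeled as a dict mapping chunk id to chunk bytes.
nodes = {"node-a": {}, "node-b": {}, "node-c": {}}
manifest = store(b"decentralized storage", nodes)

del nodes["node-a"]  # simulate one node going offline
recovered = retrieve(manifest, nodes)
```

With two replicas per chunk, losing any single node still leaves at least one copy of every chunk, so the file can be reassembled.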

Decentralized Finance (DeFi)

DeFi refers to financial services that are built on decentralized networks, allowing users to perform financial transactions without the need for intermediaries such as banks, payment processors, or insurance companies. DeFi platforms are typically built on blockchain networks like Ethereum, enabling the creation of smart contracts that automate financial operations like lending, borrowing, trading, and staking.

By eliminating intermediaries, DeFi platforms offer several benefits:

- Lower transaction fees: Without intermediaries, users can avoid high fees associated with traditional financial systems.

- Increased accessibility: Anyone with an internet connection can access DeFi platforms, democratizing access to financial services.

- Transparency and security: Blockchain technology ensures that all transactions are transparent and immutable, reducing the risk of fraud.
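
To make the idea of automated financial rules more concrete, the sketch below models an over-collateralized loan in plain Python. It is a toy simulation, not a smart contract: real DeFi protocols run on-chain and also handle price feeds, interest, and liquidation. The `LendingPool` class, its method names, and the 150% ratio are assumptions for this example.

```python
class LendingPool:
    """Toy lending rules enforced by code rather than by an intermediary."""

    def __init__(self, collateral_ratio_percent=150):
        self.collateral_ratio_percent = collateral_ratio_percent
        self.collateral = {}
        self.debt = {}

    def deposit_collateral(self, user, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.collateral[user] = self.collateral.get(user, 0) + amount

    def borrow(self, user, amount):
        if amount <= 0:
            raise ValueError("loan must be positive")
        new_debt = self.debt.get(user, 0) + amount
        # Integer arithmetic avoids rounding surprises in the ratio check.
        required = new_debt * self.collateral_ratio_percent
        if self.collateral.get(user, 0) * 100 < required:
            raise ValueError("insufficient collateral")
        self.debt[user] = new_debt

    def repay(self, user, amount):
        if amount <= 0 or amount > self.debt.get(user, 0):
            raise ValueError("invalid repayment")
        self.debt[user] -= amount


pool = LendingPool()
pool.deposit_collateral("alice", 150)
pool.borrow("alice", 100)  # allowed: 150 collateral covers 100 debt at 150%

try:
    pool.borrow("alice", 1)  # rejected: 150 collateral cannot cover 101 debt
    rejected = False
except ValueError:
    rejected = True
```

The rule is applied the same way to every user, and no party has to approve or deny the loan by hand.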

Distributed Computing and Cloud Alternatives

Decentralized software also enables distributed computing as an alternative to conventional cloud platforms. While cloud computing requires large data centers and powerful central servers, decentralized computing leverages the idle processing power of individual devices, creating a global “supercomputer.” Platforms like Golem and iExec let participants rent out unused computing power, allowing decentralized applications to scale without relying on centralized infrastructure.

Decentralized cloud alternatives can:

- Reduce reliance on centralized data centers: By utilizing the processing power of distributed nodes, decentralized cloud solutions can operate without the need for massive server farms.

- Increase privacy: Data can be processed locally, reducing the need to trust third-party cloud providers with sensitive information.
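
One practical question in this model is how to trust results computed on machines nobody controls. A common general approach is redundancy: send the same task to several workers and accept the majority answer. The sketch below illustrates that approach. It is not a description of how Golem or iExec verify work, and the worker functions are stand-ins for remote nodes.

```python
from collections import Counter


def honest_worker(task):
    return sum(task)


def faulty_worker(task):
    return sum(task) + 1  # simulates a node returning a wrong result


def run_with_redundancy(task, workers):
    """Send the same task to several workers and accept the majority answer."""
    results = [worker(task) for worker in workers]
    answer, votes = Counter(results).most_common(1)[0]
    if votes <= len(workers) // 2:
        raise RuntimeError("no majority among workers")
    return answer


# A larger job split into independent tasks.
tasks = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
workers = [honest_worker, honest_worker, faulty_worker]

partials = [run_with_redundancy(task, workers) for task in tasks]
total = sum(partials)
```

Redundancy multiplies the compute cost, which is one reason real platforms combine it with other mechanisms such as reputation or deposits.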

Chapter 5: Challenges and Limitations of Decentralized Software

Scalability Issues

While decentralized software offers numerous advantages, scalability remains a challenge. As the number of nodes in a network grows, the complexity of managing and coordinating these nodes increases. Additionally, decentralized networks often face performance issues related to latency and data throughput.

In the case of blockchain, scalability is particularly challenging. Public blockchains like Ethereum are often criticized for their slow transaction speeds and high fees, especially when the network is congested.
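
The link between block capacity, block interval, and congestion can be shown with simple arithmetic. The numbers below are hypothetical and do not describe any particular network. They only illustrate why a fixed per-block capacity leads to queues, and therefore fee competition, when demand spikes.

```python
def throughput_tps(txs_per_block, block_interval_s):
    """Average transactions per second the chain can confirm."""
    return txs_per_block / block_interval_s


def seconds_to_clear(backlog, txs_per_block, block_interval_s):
    """Time to confirm a backlog if no new transactions arrive."""
    blocks_needed = -(-backlog // txs_per_block)  # ceiling division
    return blocks_needed * block_interval_s


# Hypothetical chain: 150 transactions per block, one block every 12 seconds.
tps = throughput_tps(150, 12)
wait = seconds_to_clear(1000, 150, 12)
```

With these made-up parameters the chain confirms 12.5 transactions per second, and a backlog of 1,000 transactions needs 7 blocks, or 84 seconds, to clear.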

Regulatory and Legal Considerations

The decentralized nature of these systems presents challenges for regulators and lawmakers. Many decentralized systems operate without a central entity to hold accountable, which can complicate legal compliance, such as data protection regulations (e.g., GDPR) or financial laws.

Additionally, decentralized software often operates across multiple jurisdictions, which can create legal conflicts over issues like data sovereignty, intellectual property, and taxation.

User Adoption and Education

Decentralized software often requires users to understand more technical concepts, such as blockchain, smart contracts, and cryptographic key management. This learning curve can hinder widespread adoption, particularly for non-technical users. Furthermore, the decentralized nature of these systems may require users to take more responsibility for their own security and privacy, which can be daunting for those accustomed to the convenience of centralized services.

Chapter 6: The Future of Decentralized Software

Integration with AI and IoT

As the Internet of Things (IoT) and artificial intelligence (AI) continue to evolve, decentralized software will play a pivotal role in managing the massive amounts of data generated by these technologies. AI and machine learning models can be deployed across decentralized networks, enabling more efficient processing and real-time decision-making.

IoT devices, meanwhile, can leverage decentralized infrastructure to reduce the burden on centralized cloud services, ensuring that data is processed closer to its source and allowing for faster responses.

The Role of Governments and Enterprises

While decentralized software offers significant benefits, it is unlikely to replace traditional systems entirely. Governments and large enterprises may continue to rely on centralized systems for critical infrastructure, due to regulatory requirements and the scale at which they operate. However, we may see more hybrid models emerge, where decentralized and centralized systems work together to provide the best of both worlds.

The Ongoing Evolution

Decentralized software is still in its early stages, but the pace of innovation is rapid. As new technologies and protocols emerge, the landscape of decentralized software will continue to evolve. Whether it’s improving scalability, enhancing user experience, or overcoming regulatory hurdles, the future of decentralized software looks promising as it begins to play a more significant role in the digital economy.

Conclusion

Decentralized software offers a transformative shift beyond traditional cloud and local storage solutions. By leveraging distributed networks, blockchain, and edge computing, decentralized software provides enhanced security, privacy, and resilience. The applications are vast, ranging from storage and finance to cloud alternatives and distributed computing.

While challenges such as scalability and regulatory concerns remain, the ongoing evolution of decentralized technologies promises a future where users have more control over their data and digital lives. As the adoption of decentralized systems grows, businesses and individuals will need to adapt, embracing the potential of a more decentralized and user-empowered digital ecosystem.