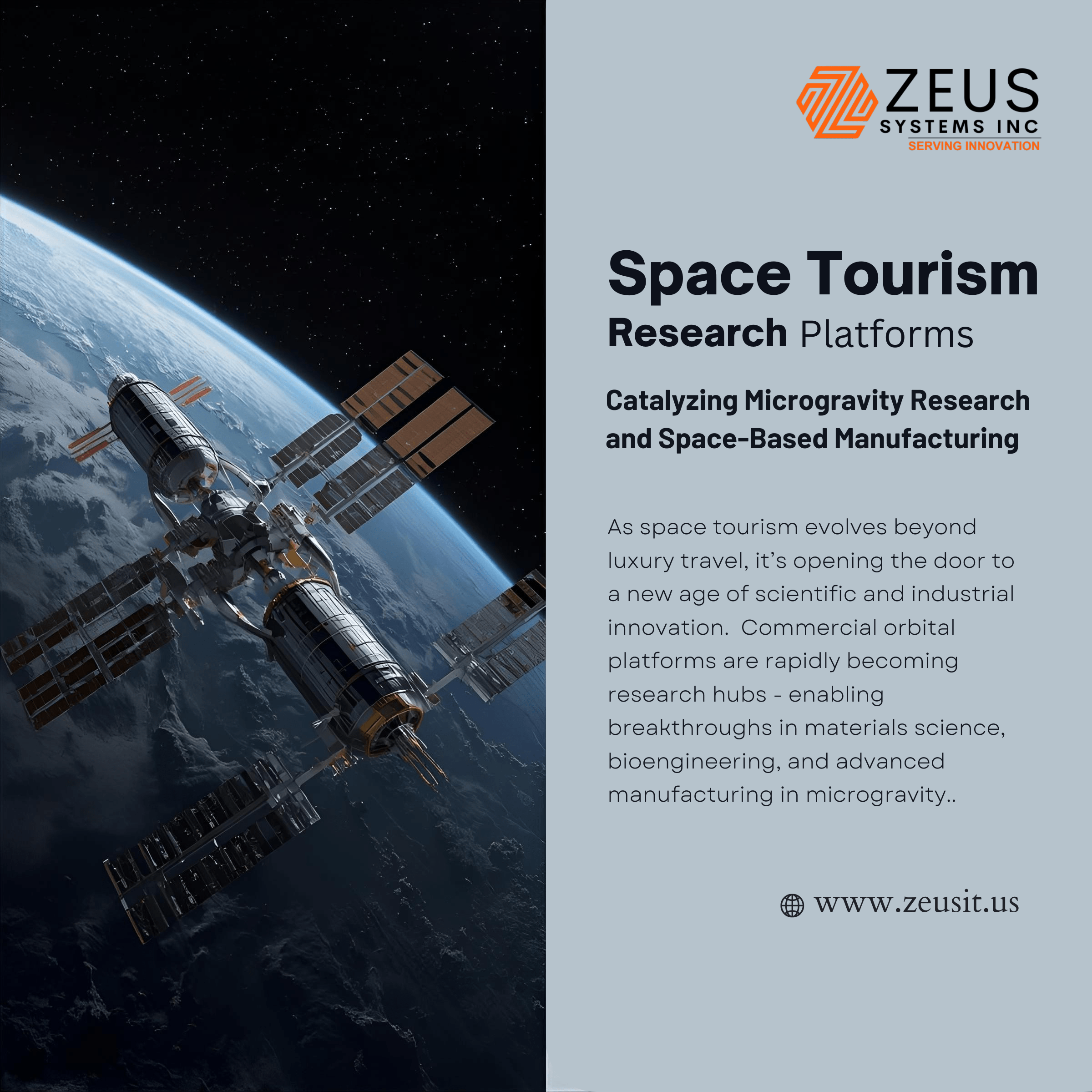

Introduction: Space Tourism’s Hidden Role as Research Infrastructure

The conversation about space tourism has largely revolved around spectacle – billionaires in suborbital joyrides, zero-gravity selfies, and the nascent “space-luxury” market.

But beneath that glitter lies a transformative, under-examined truth: space tourism is becoming the financial and physical scaffolding for an entirely new research and manufacturing ecosystem.

For the first time in history, the infrastructure built for human leisure in space – from suborbital flight vehicles to orbital “hotels” – can double as microgravity research and space-based production platforms.

If we reframe tourism not as an indulgence, but as a distributed research network, the implications are revolutionary. We enter an era where each tourist seat, each orbital cabin, and each suborbital flight can carry science payloads, materials experiments, or even micro-factories. Tourism becomes the economic catalyst that transforms microgravity from an exotic environment into a commercially viable research domain.

1. The Platform Shift: Tourism as the Engine of a Microgravity Economy

From experience economy to infrastructure economy

In the 2020s, the “space experience economy” emerged: Virgin Galactic, Blue Origin, and SpaceX all demonstrated that private citizens could fly to space.

Yet, while the public focus was on spectacle, a parallel evolution began: dual-use platforms.

Virgin Galactic, for instance, now dedicates part of its suborbital fleet to research payloads, and Blue Origin’s New Shepard capsules regularly carry microgravity experiments for universities and startups.

This marks a subtle but seismic shift:

Space tourism operators are becoming space research infrastructure providers even before fully realizing it.

The same capsules that offer panoramic windows for tourists can house micro-labs. The same orbital hotels designed for comfort can host high-value manufacturing modules. Tourism, research, and production now coexist in a single economic architecture.

The business logic of convergence

Government space agencies have always funded infrastructure for research. Commercial space tourism inverts that model: tourists fund infrastructure that researchers can use.

Each flight becomes a stacked value event:

- A tourist pays for the experience.

- A biotech startup rents 5 kg of payload space.

- A materials lab buys a few minutes of microgravity.

Tourism revenues subsidize R&D, driving down cost per experiment. Researchers, in turn, provide scientific legitimacy and data, reinforcing the industry’s reputation. This feedback loop is how tourism becomes the backbone of the space-based economy.
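The subsidy logic can be made concrete with a minimal sketch. Every figure below (flight cost, seat price, payload mass) is a hypothetical placeholder chosen for easy arithmetic, not operator data, and the `Flight` class and `research_price_per_kg` function are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class Flight:
    cost: float         # total cost of one flight (hypothetical figure)
    tourist_seats: int  # seats sold to tourists
    seat_price: float   # price per tourist seat (hypothetical figure)
    payload_kg: float   # payload mass sold to researchers


def research_price_per_kg(flight: Flight) -> float:
    """Break-even price per kg of research payload once tourist
    revenue has been subtracted from the flight cost."""
    tourist_revenue = flight.tourist_seats * flight.seat_price
    uncovered = max(flight.cost - tourist_revenue, 0.0)
    return uncovered / flight.payload_kg


# Same vehicle, same payload mass, with and without paying passengers.
research_only = Flight(cost=2_000_000, tourist_seats=0, seat_price=0, payload_kg=50)
mixed = Flight(cost=2_000_000, tourist_seats=4, seat_price=400_000, payload_kg=50)

print(research_price_per_kg(research_only))  # 40000.0
print(research_price_per_kg(mixed))          # 8000.0
```

Under these assumed numbers, tourist revenue covers most of the flight, so the break-even price researchers face falls by the same proportion. Real pricing would also depend on margins, integration costs, and insurance, which this sketch ignores.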

2. Beyond ISS: Decentralized Research Nodes in Orbit

Orbital Reef and the new “mixed-use” architecture

Blue Origin and Sierra Space’s planned Orbital Reef is among the first commercial orbital stations explicitly designed for mixed use. It’s marketed as a “business park in orbit,” where tourism, manufacturing, media production, and R&D can operate side by side.

Now imagine a network of such outposts — each hosting micro-factories, research racks, and cabins — linked through a logistics chain powered by reusable spacecraft.

The result is a distributed research architecture: smaller, faster, cheaper than the ISS.

Tourists fund the habitation modules; manufacturers rent lab time; data flows back to Earth in real-time.

This isn’t science fiction — it’s the blueprint of a self-sustaining orbital economy.

Orbital manufacturing as a service

As this infrastructure matures, we’ll see microgravity manufacturing-as-a-service emerge.

A startup may not need to own a satellite; instead, it rents a few cubic meters of manufacturing space on a tourist station for a week.

Operators handle power, telemetry, and return logistics — just as cloud providers handle compute today.

Tourism platforms become “cloud servers” for microgravity research.

3. Novel Research and Manufacturing Concepts Emerging from Tourism Platforms

Below are several forward-looking, under-explored applications uniquely enabled by the tourism + research + manufacturing convergence.

(a) Microgravity incubator rides

Suborbital flights (e.g., Virgin Galactic’s VSS Unity or Blue Origin’s New Shepard) provide 3–5 minutes of microgravity — enough for short-duration biological or materials experiments.

Imagine a “rideshare” model:

- Tourists occupy half the capsule.

- The other half is fitted with autonomous experiment racks.

- Data uplinks transmit results mid-flight.

The tourist’s payment offsets the flight cost. The researcher gains microgravity access at potentially a tenth of the cost of traditional missions.

Each flight becomes a dual-mission event: experience + science.

(b) Orbital tourist-factory modules

In LEO, orbital hotels could house hybrid modules: half accommodation, half cleanroom.

Tourists gaze at Earth while next door, engineers produce zero-defect optical fibres, grow protein crystals, or print tissue scaffolds in microgravity.

This cross-subsidization model — hospitality funding hardware — could be the first sustainable space manufacturing economy.

(c) Rapid-iteration microgravity prototyping

Today, microgravity research cadence is painfully slow: researchers wait months for ISS slots.

Tourism flights, however, can occur weekly.

This allows continuous iteration cycles:

Design → Fly → Analyse → Redesign → Re-fly within a month.

Industries that depend on precise microfluidic behavior (biotech, pharma, optics) could iterate on products many times faster.

Tourism becomes the agile R&D loop of the space economy.
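A rough way to compare the two cadences is to count complete cycles per year. In the sketch below, the 180-day wait stands in for “months” and the 21 days of analysis and redesign are an assumption; neither number comes from the article, and `iterations_per_year` is an illustrative name.

```python
def iterations_per_year(wait_days: float, analysis_days: float) -> int:
    """Complete design, fly, analyse, redesign cycles that fit in one year,
    given the wait for a flight slot and the time spent on analysis."""
    cycle_days = wait_days + analysis_days
    return int(365 // cycle_days)


# Assumed values: a six-month wait for a station slot versus a weekly flight.
station_slot = iterations_per_year(wait_days=180, analysis_days=21)
weekly_flight = iterations_per_year(wait_days=7, analysis_days=21)

print(station_slot)   # 1
print(weekly_flight)  # 13
```

With a 28-day cycle the “re-fly within a month” loop gives roughly a dozen iterations a year, against one under the slower assumption. The gap shrinks or grows directly with the wait time you plug in.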

(d) “Citizen-scientist” tourism

Future tourists may not just float — they’ll run experiments.

Through pre-flight training and modular lab kits, tourists could participate in simple data collection:

- Recording crystallization growth rates.

- Observing fluid motion for AI analysis.

- Testing materials degradation.

This model not only democratizes space science but crowdsources data at scale.

A thousand tourist-scientists per year could generate terabytes of experimental data, feeding machine-learning models for microgravity physics.

(e) Human-in-the-loop microfactories

Fully autonomous manufacturing in orbit is difficult. Human oversight is invaluable.

Tourists could serve as ad-hoc observers: documenting, photographing, and even manipulating automated systems.

By blending human curiosity with robotic precision, these “tourist-technicians” could accelerate the validation of new space-manufacturing technologies.

4. Groundbreaking Manufacturing Domains Poised for Acceleration

Tourism-enabled infrastructure could make the following frontier technologies economically feasible within the decade:

| Domain | Why Microgravity Matters | Tourism-Linked Opportunity |
| --- | --- | --- |
| Optical Fibre Manufacturing | Absence of convection and sedimentation yields ultra-pure ZBLAN fibre | Tourists fund module hosting; fibres returned via re-entry capsules |
| Protein Crystallization for Drug Design | Microgravity enables larger, purer crystals | Tourists observe & document experiments; pharma firms rent lab time |
| Biofabrication / Tissue Engineering | 3D cell structures form naturally in weightlessness | Tourism modules double as biotech fab-labs |
| Liquid-Lens Optics & Freeform Mirrors | Surface tension dominates shaping; perfect curvature | Tourists witness production; optics firms test prototypes in orbit |
| Advanced Alloys & Composites | Elimination of density-driven segregation | Shared module access lowers material R&D cost |

By embedding these manufacturing lines into tourist infrastructure, operators unlock continuous utilization — critical for economic viability.

A tourist cabin that’s empty half the year is unprofitable.

But a cabin that doubles as a research bay between flights?

That’s a self-funding orbital laboratory.
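The utilization argument reduces to simple arithmetic. The sketch below uses arbitrary currency units and invented rates purely to show the shape of the calculation; `annual_margin` and all of its inputs are hypothetical.

```python
def annual_margin(tourist_days: int, research_days: int,
                  tourist_rate: int, research_rate: int,
                  fixed_cost: int) -> int:
    """Yearly margin for one cabin: income from tourist days and
    research-bay days, minus the fixed cost of keeping it in orbit."""
    if tourist_days + research_days > 365:
        raise ValueError("a cabin cannot be booked for more than 365 days")
    income = tourist_days * tourist_rate + research_days * research_rate
    return income - fixed_cost


# Placeholder rates in arbitrary units; research days are priced lower.
RATES = dict(tourist_rate=500, research_rate=150, fixed_cost=100_000)

tourists_only = annual_margin(tourist_days=180, research_days=0, **RATES)
dual_use = annual_margin(tourist_days=180, research_days=150, **RATES)

print(tourists_only)  # -10000
print(dual_use)       # 12500
```

In this toy case the cabin loses money when it sits empty half the year and turns positive once the idle days are rented as research time, even at a much lower daily rate. Whether that holds in practice depends on conversion costs between the two modes, which are not modelled here.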

5. Economic and Technological Flywheel Effects

Tourism subsidizes research → Research validates manufacturing → Manufacturing reduces cost → Tourism expands

This positive feedback loop mirrors the early days of aviation:

In the 1920s, air races and barnstorming funded aircraft innovation; those same planes soon carried mail, then passengers, then cargo.

Space tourism may follow a similar trajectory.

Each successful tourist flight refines vehicles, reduces launch cost, and validates systems reliability — all of which benefit scientific and industrial missions.

Within 5–10 years, we could see:

- 10× increase in microgravity experiment cadence.

- 50% cost reduction in short-duration microgravity access.

- 3–5 commercial orbital stations offering mixed-use capabilities.

These aren’t distant projections — they’re the next phase of commercial aerospace evolution.

6. Technological Enablers Behind the Revolution

- Reusable launch systems (SpaceX, Blue Origin, Rocket Lab) — lowering cost per seat and per kg of payload.

- Modular station architectures (Axiom Space, Vast, Orbital Reef) — enabling plug-and-play lab/habitat combinations.

- Advanced automation and robotics — making small, remotely operable manufacturing cells viable.

- Additive manufacturing & digital twins — allowing designs to be iterated virtually and produced on-orbit.

- Miniaturization of scientific payloads — microfluidic chips, nanoscale spectrometers, and lab-on-a-chip systems fit within small racks or even tourist luggage.

Together, these developments transform orbital platforms from exclusive research bases into commercial ecosystems with multi-revenue pathways.

7. Barriers and Blind Spots

While the vision is compelling, several under-discussed challenges remain:

- Regulatory asymmetry: Commercial space labs blur categories — are they research institutions, factories, or hospitality services? New legal frameworks will be required.

- Down-mass logistics: Returning manufactured goods (fibres, bioproducts) safely and cheaply is still complex.

- Safety management: Balancing tourists’ presence with experimental hardware demands new design standards.

- Insurance and liability models: What happens if a tourist experiment contaminates another’s payload?

- Ethical considerations: Should tourists conduct biological experiments without formal scientific credentials?

These issues require proactive governance and transparent business design — otherwise, the ecosystem could stall under regulation bottlenecks.

8. Visionary Scenarios: The Next Decade of Orbit

Let’s imagine 2035 — a timeline where commercial tourism and research integration has matured.

Scenario 1: Suborbital Factory Flights

Weekly suborbital missions carry tourists alongside autonomous mini-manufacturing pods.

Each microgravity window of 3–5 minutes produces batches of microfluidic cartridges or photonic fibre.

The tourism revenue offsets cost; the products sell as “space-crafted” luxury or high-performance goods.

Scenario 2: The Orbital Fab-Hotel

An orbital station offers two zones:

- The Zenith Lounge — a panoramic suite for guests.

- The Lumen Bay — a precision-materials lab next door.

Guests tour active manufacturing processes and even take part in light duties.

“Experiential research travel” becomes a new industry category.

Scenario 3: Distributed Space Labs

Startups rent rack space across multiple orbital habitats via a unified digital marketplace — “the Airbnb of microgravity labs.”

Tourism stations host research racks between visitor cycles, achieving near-continuous utilization.

Scenario 4: Citizen Science Network

Thousands of tourists per year participate in simple physics or biological experiments.

An open database aggregates results, feeding AI systems that model fluid dynamics, crystallization, or material behavior in microgravity at unprecedented scale.

Scenario 5: Space-Native Branding

Consumer products proudly display provenance: “Grown in orbit”, “Formed beyond gravity”.

Microgravity-made materials become luxury status symbols — and later, performance standards — just as carbon-fiber once did for Earth-based industries.

9. Strategic Implications for Tech Product Companies

For established technology companies, this evolution opens new strategic horizons:

- Hardware suppliers: Develop “dual-mode” payload systems, equally suitable for tourist environments and research applications.

- Software & telemetry firms: Create control dashboards that allow Earth-based teams to monitor microgravity experiments or manufacturing lines in real-time.

- AI & data analytics: Train models on citizen-scientist datasets, enabling predictive modeling of microgravity phenomena.

- UX/UI designers: Design intuitive interfaces for tourists-turned-operators, blending safety, simplicity, and meaningful participation.

- Marketing and brand storytellers: Own the emerging narrative: Tourism as R&D infrastructure. The companies that articulate this story early will define the category.

10. The Cultural Shift: From “Look at Me in Space” to “Look What We Can Build in Space”

Space tourism’s first chapter was about personal achievement.

Its second will be about collective capability.

When every orbital stay contributes to science, when every tourist becomes a temporary researcher, and when manufacturing happens meters away from a panoramic window overlooking Earth — the meaning of “travel” itself changes.

The next generation won’t just visit space.

They’ll use it.

Conclusion: Tourism as the Catalyst of the Space-Based Economy

The greatest innovation of commercial space tourism may not be in propulsion, luxury design, or spectacle.

It may be in economic architecture — using leisure markets to fund the most expensive laboratories ever built.

Just as the personal computer emerged from hobbyist garages, the space manufacturing revolution may emerge from tourist cabins.

In the coming decade, space tourism research platforms will catalyze:

- Continuous access to microgravity for experimentation.

- The first viable space-manufacturing economy.

- A new hybrid class of citizen-scientists and orbital entrepreneurs.

Humanity is building the world’s first off-planet innovation network — not through government programs, but through curiosity, courage, and the irresistible pull of experience.

In this light, the phrase “space tourism” feels almost outdated.

What’s emerging is something grander: a civilization learning to turn wonder into infrastructure.